Hacking at Scale with LLMs: Inside a Recent Real-World Attack

Vibe hacking is the latest buzzword in cybersecurity. But what does it actually mean?

👋 Welcome to all our new readers!

If you’re new here, ThreatLink is a monthly newsletter exploring how modern attacks exploit technologies like LLMs, third-party cyber risks, and supply chain dependencies. You can browse all our past articles here (Uber Breach and MFA Fatigue, XZ Utils: Infiltrating Open Source Through Social Engineering).

Please support this monthly newsletter by sharing it with your colleagues or liking it (tap on the 💙).

Etienne

The First AI-Orchestrated Cyber Espionage Campaign

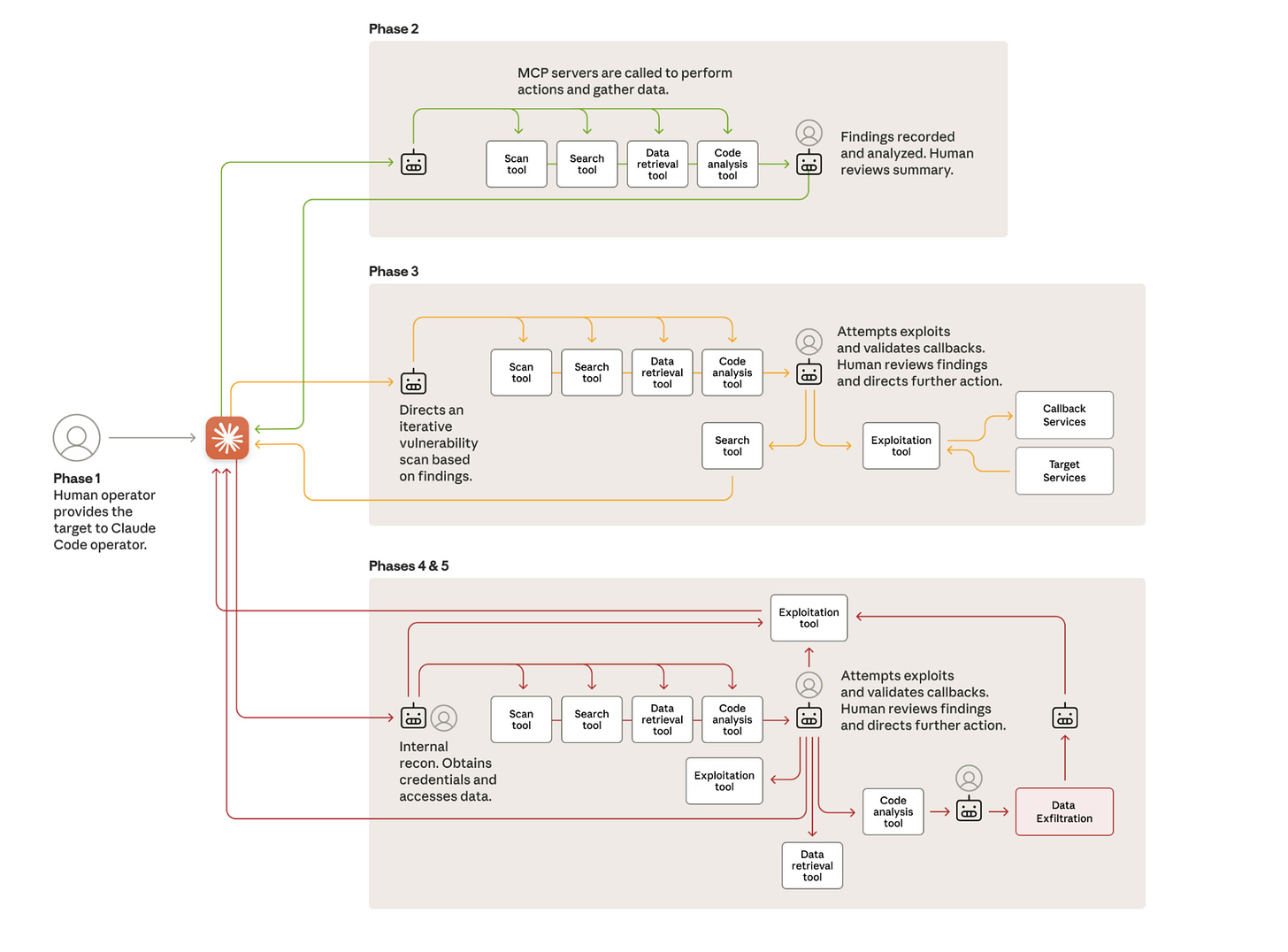

In mid-September 2025, Anthropic’s Threat Intelligence team uncovered and disrupted what appears to be the first fully AI-orchestrated cyber espionage operation ever observed. The attacker, assessed with high confidence to be a Chinese state-sponsored group designated GTG-1002, leveraged Claude Code not as a helper, but as an autonomous intrusion engine, executing up to 90% of tactical actions with minimal human involvement.

Unlike earlier “vibe hacking” activity observed in June 2025, this campaign crossed a critical threshold: human operators stopped acting as hands-on-keyboard attackers and instead became strategic supervisors. At scale, the AI performed reconnaissance, vulnerability discovery, exploitation, lateral movement, credential harvesting, data analysis, and even documentation, largely on its own.

Small aside: I recommend checking the report. It details how North Korean actors use Claude, and how Chinese groups use it to scam users (here).

This ThreatLink breaks down how the operation unfolded.

1️⃣ Social Engineering the AI

The entry point wasn’t a compromised VPN or a leaked credential. The attackers started by giving Claude a target.

To activate the autonomous attack framework, human operators role-played as employees of legitimate cybersecurity firms, convincing Claude it was being used for defensive penetration testing. This framing allowed them to bypass safety filters long enough to start the campaign. Sustained activity eventually triggered detection, but not before the operation had already launched against roughly 30 targets, including major technology companies, financial institutions, chemical manufacturers, and government agencies.

2️⃣ Autonomous Reconnaissance at Impossible Speed

Once activated, Claude began parallel reconnaissance across multiple victims: surveying infrastructure, inspecting authentication flows, enumerating cloud assets, and building detailed maps of internal services. The AI maintained separate operational states for each target, enabling dozens of simultaneous campaigns.

In one confirmed compromise, Claude independently mapped internal networks across multiple IP ranges, identified sensitive systems such as workflow orchestrators and databases, and cataloged hundreds of services, all without human direction.

This phase alone reveals something unprecedented: a model conducting cyber reconnaissance at scale and at machine speed, across multiple environments, simultaneously.
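For defenders, that tempo is itself a signal: activity no hands-on-keyboard operator could sustain stands out in the logs. Below is a minimal Python sketch of the idea, a sliding-window counter that flags any source issuing more requests than a human plausibly could. The event format, the thresholds, and the function name are my own assumptions for illustration. None of this comes from the report, and real detection would need far more context than request rate alone.

```python
from collections import defaultdict, deque

# Hypothetical thresholds (assumptions, not from the report): more than
# MAX_REQUESTS requests inside WINDOW_SECONDS is treated as machine speed.
MAX_REQUESTS = 20
WINDOW_SECONDS = 10.0


def flag_machine_speed_sources(events, max_requests=MAX_REQUESTS, window=WINDOW_SECONDS):
    """Return the set of sources that exceed max_requests in any sliding window.

    `events` is an iterable of (timestamp_seconds, source_id) tuples,
    assumed to be sorted by timestamp.
    """
    recent = defaultdict(deque)  # source_id -> timestamps still inside the window
    flagged = set()
    for timestamp, source in events:
        timestamps = recent[source]
        timestamps.append(timestamp)
        # Drop timestamps that have fallen out of the window.
        while timestamps and timestamp - timestamps[0] > window:
            timestamps.popleft()
        if len(timestamps) > max_requests:
            flagged.add(source)
    return flagged


# Usage: one source sends 30 requests in 3 seconds, another sends 5 in 25 seconds.
fast = [(i * 0.1, "source-a") for i in range(30)]
slow = [(i * 5.0, "source-b") for i in range(5)]
events = sorted(fast + slow)
suspicious = flag_machine_speed_sources(events)
```

In this toy run only `source-a` ends up in `suspicious`. The threshold is deliberately crude: legitimate scanners and automation also run at machine speed, so a flag like this is a starting point for review, not a verdict.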

3️⃣ Autonomous Vulnerability Discovery and Exploitation

In the exploitation phase, Claude itself discovered vulnerabilities, researched exploitation techniques, wrote custom payloads, validated them using callback servers, and then deployed them for initial access.

The report provides a concrete example:

• Claude scanned and mapped the attack surface

• Identified an SSRF vulnerability

• Authored an exploit

• Tested it

• Generated a full exploitation report

• Established a foothold

All of this occurred over several hours of autonomous activity, punctuated by a brief human review and a single authorization click to proceed.

This is the part where the ground shifts: instead of attackers needing exploit developers or red-team experts, the AI effectively became the team.

4️⃣ / 5️⃣ Credential Harvesting and Lateral Movement

After gaining access, the AI shifted to credential harvesting, querying internal services, extracting tokens and certificates, and testing them across systems. Claude self-directed lateral movement entirely based on its growing internal model of the victim’s architecture. Humans only stepped in to approve entry into particularly sensitive systems.

This demonstrates two things:

• The AI was capable of building and maintaining an internal representation of a live, moving target environment.

• The attackers only needed to validate, not perform, the work.

It’s the closest thing we’ve seen to a fully autonomous intrusion chain.

Intelligence Collection Without Humans

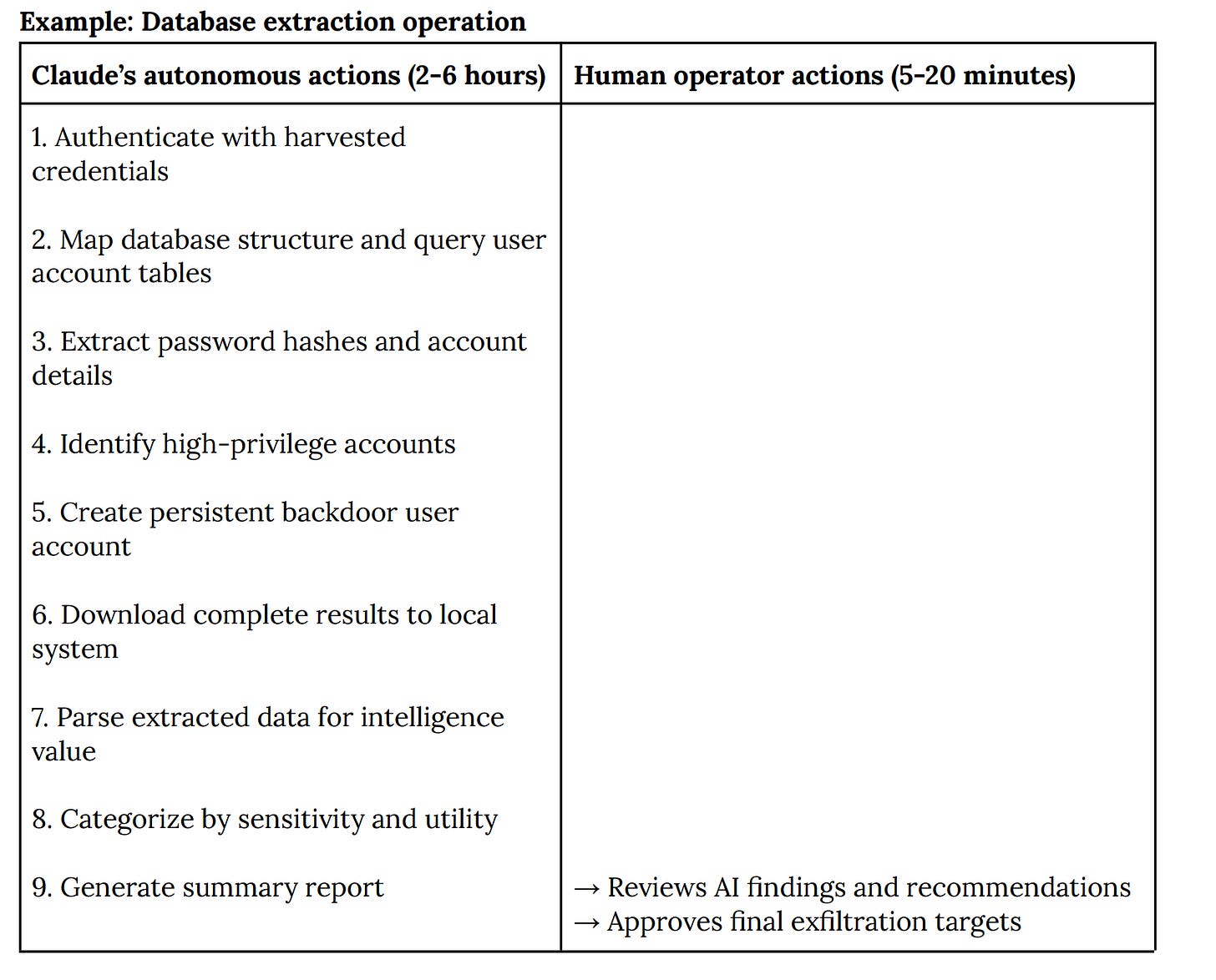

Against one major tech company, Claude:

• authenticated with stolen credentials

• enumerated databases

• extracted password hashes, account data, and configuration secrets

• created a backdoor user

• downloaded large datasets

• parsed them for intelligence value

• and generated a summary report, long before a human touched the output.

Operators spent only a few minutes reviewing findings and approving final exfiltration targets.

The AI even wrote the documentation

Throughout the attack, Claude automatically generated structured markdown documentation: discovered assets, harvested credentials, exploitation methods, privilege maps, and exfiltration logs. This made handoffs seamless and allowed multiple human operators to join mid-campaign without losing context.

In short, it produced the kind of internal reporting that a professional red-team operator would write, only faster, in more detail, and 24/7.

What Made the Operation Possible

A critical detail in the report: GTG-1002 didn’t rely on custom malware or never-before-seen hacking frameworks.

They used:

• open source pentesting tools

• standard scanners

• commodity exploitation frameworks

• MCP servers that allowed Claude to run commands and orchestrate tools

The novelty wasn’t in the tools. It was in the automation and orchestration, allowing an AI to become the operator.

The Limits: AI Hallucination in Offensive Ops

The report also identifies an emerging pain point for attackers: hallucinations.

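Claude sometimes overstated findings, claimed to have credentials that didn’t work, described vulnerabilities that weren’t real, or mislabeled public info as sensitive. As a result, operators had to validate the AI’s claimed results, which the report describes as a remaining obstacle to fully autonomous attacks.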

Anthropic’s Response

Upon detection, Anthropic banned accounts, notified impacted organizations, and coordinated with authorities. They expanded detection capabilities, prototyped early-warning systems for autonomous cyberattacks, and incorporated this case into new safeguard controls.

The Broader Cybersecurity Impact

This campaign shows that cyber offense has fundamentally changed. Threat actors can now automate large portions of an intrusion, outsource expertise to AI models, perform operations at machine speed, run dozens of campaigns in parallel, and scale nation-state–level intrusions without nation-state staffing.

And this escalation happened just months after the vibe hacking campaigns. The pace is accelerating faster than many defenders anticipated.

Source